GRU: notes on the GRU explanation in the official torch documentation

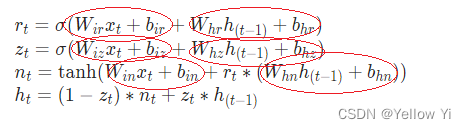

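The parameters in the formulas amount to 6 fully connected layers. weight["weight_ih_l0"] has shape [3 x hidden size, embedding size], and weight["weight_hh_l0"] has shape [3 x hidden size, hidden size]. In PyTorch, $W_{ir}$, $W_{iz}$, and $W_{in}$ are fused into a single matrix; likewise, $W_{hr}$, $W_{hz}$, and $W_{hn}$ are fused into a single matrix.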
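For reference, the block below writes out the GRU gate equations in the form used by the PyTorch nn.GRU documentation, followed by the two stacked matrices described above. The shorthand $E$ (embedding size) and $H$ (hidden size) and the block-matrix notation are introduced here for illustration and are not names from the original post.

```latex
\begin{aligned}
r_t &= \sigma\bigl(W_{ir} x_t + b_{ir} + W_{hr} h_{t-1} + b_{hr}\bigr) \\
z_t &= \sigma\bigl(W_{iz} x_t + b_{iz} + W_{hz} h_{t-1} + b_{hz}\bigr) \\
n_t &= \tanh\bigl(W_{in} x_t + b_{in} + r_t \odot (W_{hn} h_{t-1} + b_{hn})\bigr) \\
h_t &= (1 - z_t) \odot n_t + z_t \odot h_{t-1}
\end{aligned}
\qquad
W_{ih} = \begin{bmatrix} W_{ir} \\ W_{iz} \\ W_{in} \end{bmatrix} \in \mathbb{R}^{3H \times E},
\quad
W_{hh} = \begin{bmatrix} W_{hr} \\ W_{hz} \\ W_{hn} \end{bmatrix} \in \mathbb{R}^{3H \times H}
```

The three matrices acting on $x_t$ and the three acting on $h_{t-1}$ are the six fully connected layers. Splitting a fused product into three equal chunks along the last dimension recovers the $r$, $z$, $n$ parts in that order.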

$$\text{total parameters} = 3 \times \bigl((\text{embedding size} + \text{hidden size}) \times \text{hidden size} + 2 \times \text{hidden size}\bigr)$$
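As a quick arithmetic check of this formula, here is a minimal pure-Python sketch. The helper name gru_param_count is hypothetical, and it assumes a single-layer, unidirectional GRU with biases enabled.

```python
def gru_param_count(embedding_size: int, hidden_size: int) -> int:
    """Parameter count of one unidirectional GRU layer with biases (hypothetical helper)."""
    weight_ih = 3 * hidden_size * embedding_size  # fused W_ir, W_iz, W_in
    weight_hh = 3 * hidden_size * hidden_size     # fused W_hr, W_hz, W_hn
    bias_ih = 3 * hidden_size                     # fused b_ir, b_iz, b_in
    bias_hh = 3 * hidden_size                     # fused b_hr, b_hz, b_hn
    return weight_ih + weight_hh + bias_ih + bias_hh


embedding_size, hidden_size = 10, 15
total = gru_param_count(embedding_size, hidden_size)
# Same value as the closed-form expression 3 * ((E + H) * H + 2 * H).
closed_form = 3 * ((embedding_size + hidden_size) * hidden_size + 2 * hidden_size)
print(total, closed_form)  # prints: 1215 1215
```

With embedding size 10 and hidden size 15, this is the count that summing p.numel() over the parameters of nn.GRU(10, 15, 1) should report.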

Single layer

    # -*- coding: utf-8 -*-
    """
    Created on Wed Aug 18 11:11:08 2021
    @author: yihuang
    """
    import torch
    import torch.nn as nn

    embedding_size = 10
    hidden_size = 15
    time_size = 4

    rnn = nn.GRU(embedding_size, hidden_size, 1, batch_first=True)
    total_params = sum(p.numel() for p in rnn.parameters())
    print(total_params)

    input = torch.randn(1, time_size, embedding_size)
    output, hn = rnn(input)
    weight = rnn.state_dict()
    print(weight["weight_ih_l0"].shape, weight["weight_hh_l0"].shape)


    def gruFun(Wih, Bih, Whh, Bhh, x, hid):
        # Fused projections: each result holds the r, z, n parts side by side.
        gate_x = torch.matmul(x, Wih.T) + Bih
        gate_h = torch.matmul(hid, Whh.T) + Bhh
        i_r, i_i, i_n = gate_x.chunk(3, -1)
        h_r, h_i, h_n = gate_h.chunk(3, -1)
        resetgate = torch.sigmoid(i_r + h_r)
        inputgate = torch.sigmoid(i_i + h_i)  # the update gate z
        newgate = torch.tanh(i_n + (resetgate * h_n))
        hy = newgate + inputgate * (hid - newgate)
        return hy


    in_ = input
    h0 = torch.zeros(1, hidden_size)  # initial hidden state, shape [batch, hidden_size]
    out = torch.zeros(1, time_size, hidden_size)
    for i in range(in_.size(1)):
        ht = gruFun(weight["weight_ih_l0"], weight["bias_ih_l0"],
                    weight["weight_hh_l0"], weight["bias_hh_l0"], in_[:, i, :], h0)
        out[:, i, :] = ht
        h0 = ht  # carry the hidden state to the next time step

    print("output:\n", output)
    print("out:\n", out)

Output (recorded from a run with time_size = 3 and hidden_size = 10, as the [1, 3, 10] shape shows; the values vary with the random initialization):

    output:
     tensor([[[ 0.2349,  0.3498, -0.0706, -0.1650,  0.4986,  0.3309,  0.1601,
                0.5339,  0.0791,  0.6002],
              [ 0.2641,  0.2583,  0.1358, -0.1170,  0.6451,  0.3311,  0.2608,
                0.3465,  0.4330,  0.7000],
              [ 0.3160,  0.3977,  0.1755,  0.0991,  0.7275,  0.0311, -0.0969,
                0.2958,  0.2427,  0.3704]]], grad_fn=<TransposeBackward1>)
    out:
     tensor([[[ 0.2349,  0.3498, -0.0706, -0.1650,  0.4986,  0.3309,  0.1601,
                0.5339,  0.0791,  0.6002],
              [ 0.2641,  0.2583,  0.1358, -0.1170,  0.6451,  0.3311,  0.2608,
                0.3465,  0.4330,  0.7000],
              [ 0.3160,  0.3977,  0.1755,  0.0991,  0.7275,  0.0311, -0.0969,
                0.2958,  0.2427,  0.3704]]])
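The last line of gruFun computes newgate + inputgate * (hid - newgate) rather than the textbook form (1 - z) * n + z * h. The two are algebraically identical, which the scalar sketch below checks numerically. It uses only the standard library; the function name gru_cell_scalar and the sample weights are made up for illustration, and working with hidden size 1 is a simplifying assumption.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def gru_cell_scalar(x, h, w_i, b_i, w_h, b_h):
    """One GRU step with hidden size 1; w_* and b_* are (r, z, n) triples (illustrative)."""
    i_r, i_z, i_n = (w * x + b for w, b in zip(w_i, b_i))
    h_r, h_z, h_n = (w * h + b for w, b in zip(w_h, b_h))
    r = sigmoid(i_r + h_r)            # reset gate
    z = sigmoid(i_z + h_z)            # update gate (inputgate in gruFun)
    n = math.tanh(i_n + r * h_n)      # candidate state (newgate in gruFun)
    compact = n + z * (h - n)         # form used in gruFun
    textbook = (1.0 - z) * n + z * h  # form in the GRU equations
    return compact, textbook


# Arbitrary sample values, chosen only to exercise the formulas.
compact, textbook = gru_cell_scalar(
    x=0.5, h=-0.2,
    w_i=(0.1, -0.3, 0.7), b_i=(0.0, 0.1, -0.1),
    w_h=(0.4, 0.2, -0.5), b_h=(0.05, 0.0, 0.2),
)
print(abs(compact - textbook) < 1e-12)  # prints: True
```

The compact form needs one multiplication instead of two, which is a plausible reason to prefer it in an implementation.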

Multi-layer GRU

    # -*- coding: utf-8 -*-
    """
    Created on Wed Aug 18 11:11:08 2021
    @author: yihuang
    """
    import torch
    import torch.nn as nn

    rnn = nn.GRU(10, 10, 2, batch_first=True)
    input = torch.randn(1, 2, 10)
    output, hn = rnn(input)
    weight = rnn.state_dict()


    def gruFun(Wih, Bih, Whh, Bhh, x, hid):
        # Fused projections: each result holds the r, z, n parts side by side.
        gate_x = torch.matmul(x, Wih.T) + Bih
        gate_h = torch.matmul(hid, Whh.T) + Bhh
        i_r, i_i, i_n = gate_x.chunk(3, -1)
        h_r, h_i, h_n = gate_h.chunk(3, -1)
        resetgate = torch.sigmoid(i_r + h_r)
        inputgate = torch.sigmoid(i_i + h_i)  # the update gate z
        newgate = torch.tanh(i_n + (resetgate * h_n))
        hy = newgate + inputgate * (hid - newgate)
        return hy


    in_ = input
    # zeros_like works here only because input size equals hidden size (both 10).
    h01 = torch.zeros_like(in_[:, 0, :])  # hidden state of layer 0
    h02 = h01                             # hidden state of layer 1
    out = torch.zeros_like(in_)
    for i in range(in_.size(1)):
        # Layer 0 consumes the input at time step i.
        ht = gruFun(weight["weight_ih_l0"], weight["bias_ih_l0"],
                    weight["weight_hh_l0"], weight["bias_hh_l0"], in_[:, i, :], h01)
        h01 = ht
        # Layer 1 consumes the output of layer 0 at the same time step.
        ht = gruFun(weight["weight_ih_l1"], weight["bias_ih_l1"],
                    weight["weight_hh_l1"], weight["bias_hh_l1"], ht, h02)
        out[:, i, :] = ht
        h02 = ht

    print("output:\n", output)
    print("out:\n", out)

Output:

    output:
     tensor([[[ 0.1819,  0.0716, -0.0427,  0.1667, -0.1247, -0.0144,  0.0262,
                0.1758,  0.0890,  0.1099],
              [ 0.2991,  0.1910, -0.0414,  0.2278, -0.2381,  0.0801, -0.0465,
                0.2214,  0.2028,  0.1974]]], grad_fn=<TransposeBackward1>)
    out:
     tensor([[[ 0.1819,  0.0716, -0.0427,  0.1667, -0.1247, -0.0144,  0.0262,
                0.1758,  0.0890,  0.1099],
              [ 0.2991,  0.1910, -0.0414,  0.2278, -0.2381,  0.0801, -0.0465,
                0.2214,  0.2028,  0.1974]]])
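The two-layer loop relies on the per-layer key names in the state dict. As a sketch of that naming and shape pattern, the hypothetical helper below lists the expected entries for a unidirectional GRU with biases enabled, without calling PyTorch. From layer 1 onward the input-to-hidden matrix receives the previous layer's hidden state, so its second dimension is the hidden size.

```python
def gru_state_dict_shapes(input_size: int, hidden_size: int, num_layers: int) -> dict:
    """Expected state_dict keys and shapes (hypothetical helper; unidirectional, bias=True)."""
    shapes = {}
    for layer in range(num_layers):
        # Layer 0 reads the input; deeper layers read the previous layer's hidden state.
        in_features = input_size if layer == 0 else hidden_size
        shapes[f"weight_ih_l{layer}"] = (3 * hidden_size, in_features)
        shapes[f"weight_hh_l{layer}"] = (3 * hidden_size, hidden_size)
        shapes[f"bias_ih_l{layer}"] = (3 * hidden_size,)
        shapes[f"bias_hh_l{layer}"] = (3 * hidden_size,)
    return shapes


shapes = gru_state_dict_shapes(input_size=10, hidden_size=10, num_layers=2)
for name, shape in shapes.items():
    print(name, shape)

# Total number of scalars across all entries.
total = 0
for shape in shapes.values():
    count = 1
    for dim in shape:
        count *= dim
    total += count
print(total)  # prints: 1320
```

For the nn.GRU(10, 10, 2) model above, each layer contributes 660 parameters, which matches the single-layer formula applied twice with embedding size equal to hidden size.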

Reference:

https://blog.csdn.net/u012348774/article/details/109293450