
Nvidia Video Codec SDK: AppDec Walkthrough

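- Project Framework
- AppDec
- Overall Hardware Decoding Framework
- Entry Point: main
- Decoding Flow: DecodeMediaFile
- Initializing the Decoder
- The Actual Decoding Function: Decode
- The Callback HandlePictureDisplay
- Data Pointers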

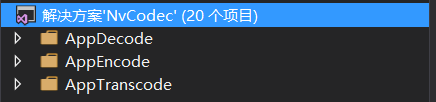

Project Framework

The SDK version used here is Video_Codec_SDK_8.2.16. Download: NVIDIA VIDEO CODEC SDK 8.2.16.zip

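The project is organized as follows:

- AppDecode: hardware decoding of a video source
- AppEncode: video encoding
- AppTranscode: transcoding (converting between encoding formats)

This post studies the hardware decoding sample, AppDec.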

AppDec

Overall Hardware Decoding Framework

Entry Point: main

```cpp
/**
 * This sample application illustrates the demuxing and decoding of media file with
 * resize and crop of the output image. The application supports both planar (YUV420P and YUV420P16)
 * and non-planar (NV12 and P016) output formats.
 */
int main(int argc, char **argv)
{
    // Default input path: a local test file
    char szInFilePath[256] = "D:/H265/video/KiteFlite_3840x1920_0tile_22_0.h265", szOutFilePath[256] = "";
    bool bOutPlanar = true;
    int iGpu = 0;
    Rect cropRect = {};
    Dim resizeDim = {};
    try
    {
        // Read the input file etc. from the command-line arguments, e.g. text.h265
        ParseCommandLine(argc, argv, szInFilePath, szOutFilePath, bOutPlanar, iGpu, cropRect, resizeDim);
        CheckInputFile(szInFilePath);

        if (!*szOutFilePath) {
            sprintf(szOutFilePath, bOutPlanar ? "out.planar" : "out.native");
        }

        // Initialize the CUDA environment
        ck(cuInit(0));
        int nGpu = 0;
        ck(cuDeviceGetCount(&nGpu));
        if (iGpu < 0 || iGpu >= nGpu) {
            std::cout << "GPU ordinal out of range. Should be within [" << 0 << ", " << nGpu - 1 << "]" << std::endl;
            return 1;
        }
        CUdevice cuDevice = 0;
        ck(cuDeviceGet(&cuDevice, iGpu));
        char szDeviceName[80];
        ck(cuDeviceGetName(szDeviceName, sizeof(szDeviceName), cuDevice));
        std::cout << "GPU in use: " << szDeviceName << std::endl;

        // Create the CUDA context
        CUcontext cuContext = NULL;
        ck(cuCtxCreate(&cuContext, 0, cuDevice));

        // Decode
        std::cout << "Decode with demuxing." << std::endl;
        DecodeMediaFile(cuContext, szInFilePath, szOutFilePath, bOutPlanar, cropRect, resizeDim);
    }
    catch (const std::exception& ex)
    {
        std::cout << ex.what();
        exit(1);
    }
    return 0;
}
```
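The post does not show ParseCommandLine, but part of its job is to turn the crop and resize options into the `Rect` and `Dim` values that main passes on. The sketch below is a hypothetical helper for that step and is not the SDK's parser. The struct fields (`l, t, r, b` and `w, h`) and the string formats `l,t,r,b` and `WxH` are my assumptions.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Assumed to mirror the SDK's helper structs (left/top/right/bottom, width/height).
struct Rect { int l, t, r, b; };
struct Dim { int w, h; };

// Hypothetical parser for a crop string such as "0,0,1920,1080".
// Returns false if the string is malformed or the rectangle is empty.
bool ParseCrop(const std::string &s, Rect &rect) {
    Rect r = {};
    char tail = 0;
    // The trailing %c catches garbage: a well-formed string yields exactly 4 conversions.
    if (std::sscanf(s.c_str(), "%d,%d,%d,%d%c", &r.l, &r.t, &r.r, &r.b, &tail) != 4) return false;
    if (r.l < 0 || r.t < 0 || r.r <= r.l || r.b <= r.t) return false;
    rect = r;
    return true;
}

// Hypothetical parser for a resize string such as "1280x720".
bool ParseResize(const std::string &s, Dim &dim) {
    Dim d = {};
    char tail = 0;
    if (std::sscanf(s.c_str(), "%dx%d%c", &d.w, &d.h, &tail) != 2) return false;
    // Even sizes keep the 4:2:0 chroma planes at exactly half resolution.
    if (d.w <= 0 || d.h <= 0 || d.w % 2 || d.h % 2) return false;
    dim = d;
    return true;
}
```

Rejecting malformed input here keeps bad values away from the decoder, which otherwise only sees the already-filled structs.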

Decoding Flow: DecodeMediaFile

```cpp
void DecodeMediaFile(CUcontext cuContext, const char *szInFilePath, const char *szOutFilePath, bool bOutPlanar,
    const Rect &cropRect, const Dim &resizeDim)
{
    // Open the output file
    std::ofstream fpOut(szOutFilePath, std::ios::out | std::ios::binary);
    if (!fpOut)
    {
        std::ostringstream err;
        err << "Unable to open output file: " << szOutFilePath << std::endl;
        throw std::invalid_argument(err.str());
    }

    // Demux the input file; FFmpegDemuxer is a class that wraps FFmpeg's demuxing
    FFmpegDemuxer demuxer(szInFilePath);
    // Create the hardware decoder; its constructor registers the three important parser callbacks.
    // The 4th argument is bUseDeviceFrame: if true, decoded frames stay in GPU memory and are
    // not copied to host memory. Here it is false, so frames are returned in host memory.
    NvDecoder dec(cuContext, demuxer.GetWidth(), demuxer.GetHeight(), false, FFmpeg2NvCodecId(demuxer.GetVideoCodec()),
        NULL, false, false, &cropRect, &resizeDim);

    int nVideoBytes = 0, nFrameReturned = 0, nFrame = 0;
    uint8_t *pVideo = NULL, **ppFrame;
    do {
        // Demux: get the bitstream data of the next frame in pVideo; nVideoBytes is its size in bytes.
        // Note that Demux overwrites pVideo with pkt.data, i.e. it changes the address pVideo points to!
        demuxer.Demux(&pVideo, &nVideoBytes);
        // The actual decoding happens here
        dec.Decode(pVideo, nVideoBytes, &ppFrame, &nFrameReturned);
        if (!nFrame && nFrameReturned)
            LOG(INFO) << dec.GetVideoInfo();  // print information about the video stream

        // Hardware decoding is asynchronous: nFrameReturned is the number of frames
        // returned by this call, which can be 0 or more than 1
        for (int i = 0; i < nFrameReturned; i++) {
            if (bOutPlanar) {
                // Convert NV12/P016 to planar format
                ConvertToPlanar(ppFrame[i], dec.GetWidth(), dec.GetHeight(), dec.GetBitDepth());
            }
            // Write the frame; GetFrameSize() returns the current frame size based on the pixel format
            fpOut.write(reinterpret_cast<char*>(ppFrame[i]), dec.GetFrameSize());
        }
        nFrame += nFrameReturned;
    } while (nVideoBytes);

    std::cout << "Total frame decoded: " << nFrame << std::endl
        << "Saved in file " << szOutFilePath << " in "
        << (dec.GetBitDepth() == 8 ? (bOutPlanar ? "iyuv" : "nv12") : (bOutPlanar ? "yuv420p16" : "p016"))
        << " format" << std::endl;
    fpOut.close();
}
```
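Two questions come up with this loop: why can nFrameReturned be 0 for the first packets, and why does the loop keep running until Demux reports 0 bytes? The toy model below is not NVDEC. `MockDecoder` is a hypothetical class that holds back `delay` frames, roughly the way the parser's display delay (ulMaxDisplayDelay) does, and releases everything when it receives an empty packet, similar to how Decode flags an empty packet as end of stream. The loop has the same shape as the one in DecodeMediaFile.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical stand-in for NvDecoder: each non-empty packet produces one "frame"
// (just its index), but frames are released only once more than `delay` are queued.
// An empty packet (size 0) flushes everything, like an end-of-stream packet.
class MockDecoder {
public:
    explicit MockDecoder(int delay) : delay_(delay) {}

    // Returns the frames that became ready during this call (possibly none).
    std::vector<int> Decode(const uint8_t *data, int size) {
        std::vector<int> ready;
        if (data && size > 0) pending_.push_back(next_++);
        bool eos = (data == nullptr || size == 0);
        while (!pending_.empty() && (eos || (int)pending_.size() > delay_)) {
            ready.push_back(pending_.front());
            pending_.pop_front();
        }
        return ready;
    }

private:
    int delay_;
    int next_ = 0;
    std::deque<int> pending_;
};

// Same loop shape as DecodeMediaFile: demux, decode, consume 0..n frames,
// and stop only after the empty end-of-stream packet has been decoded.
// Returns how many frames each Decode call produced.
std::vector<int> RunDecodeLoop(int nPackets, int delay, int &nFrameTotal) {
    MockDecoder dec(delay);
    std::vector<int> perCall;
    const uint8_t dummy[1] = {0};
    int iPacket = 0;
    int nVideoBytes = 0;
    nFrameTotal = 0;
    do {
        // Stand-in for demuxer.Demux(): 1 byte per packet, then 0 bytes at end of file.
        nVideoBytes = (iPacket < nPackets) ? 1 : 0;
        iPacket++;
        const uint8_t *pVideo = nVideoBytes ? &dummy[0] : nullptr;
        std::vector<int> frames = dec.Decode(pVideo, nVideoBytes);
        perCall.push_back((int)frames.size());
        nFrameTotal += (int)frames.size();
    } while (nVideoBytes);
    return perCall;
}
```

With three packets and `delay = 2`, the per-call counts are 0, 0, 1, 2. The final call, made with the empty packet, returns two frames at once. This is why the loop must run one extra iteration after the demuxer reaches end of file.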

Initializing the Decoder

```cpp
// Decoder initialization code
NvDecoder::NvDecoder(CUcontext cuContext, int nWidth, int nHeight, bool bUseDeviceFrame, cudaVideoCodec eCodec, std::mutex *pMutex,
    bool bLowLatency, bool bDeviceFramePitched, const Rect *pCropRect, const Dim *pResizeDim, int maxWidth, int maxHeight) :
    m_cuContext(cuContext), m_bUseDeviceFrame(bUseDeviceFrame), m_eCodec(eCodec), m_pMutex(pMutex), m_bDeviceFramePitched(bDeviceFramePitched),
    m_nMaxWidth (maxWidth), m_nMaxHeight(maxHeight)
{
    if (pCropRect) m_cropRect = *pCropRect;
    if (pResizeDim) m_resizeDim = *pResizeDim;

    // This API is used to create a CtxLock object
    NVDEC_API_CALL(cuvidCtxLockCreate(&m_ctxLock, cuContext));

    // CUVIDPARSERPARAMS holds the parameters used to create the video parser.
    // The key settings are the three callbacks that process the data the parser produces.
    CUVIDPARSERPARAMS videoParserParameters = {};
    videoParserParameters.CodecType = eCodec;              // codec of the stream, e.g. H.264
    videoParserParameters.ulMaxNumDecodeSurfaces = 1;      // max number of decode surfaces (the parser cycles through them)
    videoParserParameters.ulMaxDisplayDelay = bLowLatency ? 0 : 1;
    videoParserParameters.pUserData = this;                // passed back as the first argument of every callback
    // The three callbacks
    videoParserParameters.pfnSequenceCallback = HandleVideoSequenceProc;  // called before decoding starts and whenever the format changes
    videoParserParameters.pfnDecodePicture = HandlePictureDecodeProc;     // called when a picture is ready to be decoded
    videoParserParameters.pfnDisplayPicture = HandlePictureDisplayProc;   // called when a decoded picture is ready for display
    // m_pMutex is an optional caller-supplied mutex; when it is NULL, no locking is done
    if (m_pMutex) m_pMutex->lock();
    // Create video parser object and initialize
    NVDEC_API_CALL(cuvidCreateVideoParser(&m_hParser, &videoParserParameters));
    if (m_pMutex) m_pMutex->unlock();
}
```
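The parser is a C API, so it cannot call member functions directly. Instead, the constructor stores `this` in pUserData and registers static functions (HandleVideoSequenceProc and the others). Each of these casts the pointer back to the object and forwards the call. The sketch below reproduces that trampoline pattern. `MockParserParams` and `MockParseVideoData` are made-up names that only imitate the shape of CUVIDPARSERPARAMS and cuvidParseVideoData; they are not the real API.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical C-style parser interface, loosely shaped like CUVIDPARSERPARAMS:
// plain function pointers plus an opaque user-data pointer.
struct MockDispInfo { int picture_index; };
typedef int (*PFN_SEQUENCE)(void *, int /*codedWidth*/);
typedef int (*PFN_DISPLAY)(void *, MockDispInfo *);

struct MockParserParams {
    void *pUserData;
    PFN_SEQUENCE pfnSequenceCallback;
    PFN_DISPLAY pfnDisplayPicture;
};

// Hypothetical parser entry point: fires the sequence callback once, then one
// display callback per picture, synchronously on the caller's thread.
void MockParseVideoData(const MockParserParams &p, int width, int nPictures) {
    p.pfnSequenceCallback(p.pUserData, width);
    for (int i = 0; i < nPictures; i++) {
        MockDispInfo info = { i };
        p.pfnDisplayPicture(p.pUserData, &info);
    }
}

class Decoder {
public:
    Decoder() {
        params_.pUserData = this;  // like videoParserParameters.pUserData = this
        params_.pfnSequenceCallback = HandleVideoSequenceProc;
        params_.pfnDisplayPicture = HandlePictureDisplayProc;
    }
    void Feed(int width, int nPictures) { MockParseVideoData(params_, width, nPictures); }

    std::vector<std::string> events;  // records which member handlers ran

private:
    // Static trampolines: recover the object from pUserData and forward the call.
    static int HandleVideoSequenceProc(void *pUserData, int width) {
        return static_cast<Decoder *>(pUserData)->HandleVideoSequence(width);
    }
    static int HandlePictureDisplayProc(void *pUserData, MockDispInfo *pDispInfo) {
        return static_cast<Decoder *>(pUserData)->HandlePictureDisplay(pDispInfo);
    }
    int HandleVideoSequence(int width) {
        events.push_back("sequence " + std::to_string(width));
        return 1;
    }
    int HandlePictureDisplay(MockDispInfo *pDispInfo) {
        events.push_back("display " + std::to_string(pDispInfo->picture_index));
        return 1;
    }

    MockParserParams params_ = {};
};
```

Calling `Feed(1920, 2)` records "sequence 1920", "display 0", "display 1". All three handlers run inside the single parse call, which matches how cuvidParseVideoData invokes the real callbacks from within the call.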

The Actual Decoding Function: Decode

```cpp
// Decode does the actual decoding; the bitstream is in *pData
bool NvDecoder::Decode(const uint8_t *pData, int nSize, uint8_t ***pppFrame, int *pnFrameReturned, uint32_t flags, int64_t **ppTimestamp, int64_t timestamp, CUstream stream)
{
    if (!m_hParser)
    {
        NVDEC_THROW_ERROR("Parser not initialized.", CUDA_ERROR_NOT_INITIALIZED);
        return false;
    }

    m_nDecodedFrame = 0;
    // Wrap the AVPacket data in a CUVIDSOURCEDATAPACKET and hand it to cuvidParseVideoData
    CUVIDSOURCEDATAPACKET packet = {0};
    packet.payload = pData;          // pointer to the packet payload data
    packet.payload_size = nSize;     // number of bytes in the payload
    packet.flags = flags | CUVID_PKT_TIMESTAMP;
    packet.timestamp = timestamp;
    // An empty packet marks the last packet of the stream
    if (!pData || nSize == 0) {
        packet.flags |= CUVID_PKT_ENDOFSTREAM;
    }
    m_cuvidStream = stream;
    if (m_pMutex) m_pMutex->lock();   // lock around decoding
    // The parser invokes the callbacks (including HandlePictureDisplay, which
    // increments m_nDecodedFrame) from inside this call
    NVDEC_API_CALL(cuvidParseVideoData(m_hParser, &packet));
    if (m_pMutex) m_pMutex->unlock(); // unlock
    m_cuvidStream = 0;

    // Check whether any frames were decoded
    if (m_nDecodedFrame > 0)
    {
        if (pppFrame)
        {
            m_vpFrameRet.clear();   // empty the returned list
            std::lock_guard<std::mutex> lock(m_mtxVPFrame);
            // Copy the first m_nDecodedFrame pointers of m_vpFrame into m_vpFrameRet
            m_vpFrameRet.insert(m_vpFrameRet.begin(), m_vpFrame.begin(), m_vpFrame.begin() + m_nDecodedFrame);
            *pppFrame = &m_vpFrameRet[0];  // *pppFrame now points to the first element of m_vpFrameRet
        }
        if (ppTimestamp)
        {
            *ppTimestamp = &m_vTimestamp[0];
        }
    }
    if (pnFrameReturned)
    {
        *pnFrameReturned = m_nDecodedFrame;
    }
    return true;
}
```
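Decode does not hand out freshly allocated memory. HandlePictureDisplay fills buffers from the pool m_vpFrame and grows the pool only when a single Decode call produces more frames than the pool currently holds. Decode then copies the first m_nDecodedFrame pointers into m_vpFrameRet. As a result, the buffers behind ppFrame are reused by the next Decode call, so each frame must be consumed or copied before decoding continues. The hypothetical `FramePool` class below models only that bookkeeping, with host buffers. Its names are mine, not the SDK's.

```cpp
#include <cassert>
#include <cstdint>
#include <mutex>
#include <vector>

// Hypothetical model of NvDecoder's frame bookkeeping (host memory only).
class FramePool {
public:
    explicit FramePool(size_t frameSize) : frameSize_(frameSize) {}
    ~FramePool() {
        for (uint8_t *p : frames_) delete[] p;
    }
    FramePool(const FramePool &) = delete;
    FramePool &operator=(const FramePool &) = delete;

    // Like the start of Decode(): reset the per-call frame counter.
    void BeginDecode() { nDecoded_ = 0; }

    // Like HandlePictureDisplay(): take the next buffer, allocating only if the pool is too small.
    uint8_t *NextFrame(uint8_t fillValue) {
        std::lock_guard<std::mutex> lock(mtx_);
        if ((size_t)++nDecoded_ > frames_.size()) {
            frames_.push_back(new uint8_t[frameSize_]);
        }
        uint8_t *p = frames_[nDecoded_ - 1];
        for (size_t i = 0; i < frameSize_; i++) p[i] = fillValue;  // stand-in for the 2D copies
        return p;
    }

    // Like the end of Decode(): expose the first nDecoded_ buffers.
    uint8_t **EndDecode(int *pnFrameReturned) {
        std::lock_guard<std::mutex> lock(mtx_);
        ret_.assign(frames_.begin(), frames_.begin() + nDecoded_);
        *pnFrameReturned = nDecoded_;
        return nDecoded_ ? ret_.data() : nullptr;
    }

    size_t Allocated() const { return frames_.size(); }

private:
    size_t frameSize_;
    int nDecoded_ = 0;
    std::vector<uint8_t *> frames_;  // plays the role of m_vpFrame
    std::vector<uint8_t *> ret_;     // plays the role of m_vpFrameRet
    std::mutex mtx_;                 // plays the role of m_mtxVPFrame
};
```

If the first call yields two frames and the second call yields one, the second call's frame lands in the same buffer as the first call's first frame. Anything still holding that old pointer then sees the new data.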

The Callback HandlePictureDisplay

My main goal was to find where the decoded data lives in GPU memory and what the pointers point to, so I focused on the HandlePictureDisplay function.

```cpp
/* Return value from HandlePictureDisplay() are interpreted as:
 *  0: fail, >=1: succeeded
 */
int NvDecoder::HandlePictureDisplay(CUVIDPARSERDISPINFO *pDispInfo) {
    CUVIDPROCPARAMS videoProcessingParameters = {};
    videoProcessingParameters.progressive_frame = pDispInfo->progressive_frame;
    videoProcessingParameters.second_field = pDispInfo->repeat_first_field + 1;
    videoProcessingParameters.top_field_first = pDispInfo->top_field_first;
    videoProcessingParameters.unpaired_field = pDispInfo->repeat_first_field < 0;
    videoProcessingParameters.output_stream = m_cuvidStream;

    CUdeviceptr dpSrcFrame = 0;
    unsigned int nSrcPitch = 0;
    // cuvidMapVideoFrame returns a CUDA device pointer and the pitch of the video frame:
    // this is where we get the device pointer of the decoded data --> dpSrcFrame
    NVDEC_API_CALL(cuvidMapVideoFrame(m_hDecoder, pDispInfo->picture_index, &dpSrcFrame,
        &nSrcPitch, &videoProcessingParameters));

    CUVIDGETDECODESTATUS DecodeStatus;
    memset(&DecodeStatus, 0, sizeof(DecodeStatus));
    CUresult result = cuvidGetDecodeStatus(m_hDecoder, pDispInfo->picture_index, &DecodeStatus);
    if (result == CUDA_SUCCESS && (DecodeStatus.decodeStatus == cuvidDecodeStatus_Error || DecodeStatus.decodeStatus == cuvidDecodeStatus_Error_Concealed))
    {
        printf("Decode Error occurred for picture %d\n", m_nPicNumInDecodeOrder[pDispInfo->picture_index]);
    }

    uint8_t *pDecodedFrame = nullptr;
    {
        // lock_guard unlocks automatically: when control leaves the scope in which the
        // lock_guard was created, it is destroyed and releases the mutex
        std::lock_guard<std::mutex> lock(m_mtxVPFrame);
        // One more frame decoded: increment m_nDecodedFrame and allocate a buffer if the pool is too small
        if ((unsigned)++m_nDecodedFrame > m_vpFrame.size())
        {
            // Not enough frames in stock
            m_nFrameAlloc++;
            uint8_t *pFrame = NULL;
            // m_bUseDeviceFrame is set when the decoder is constructed: if true, decoded
            // frames stay in GPU memory and are not copied to host memory
            if (m_bUseDeviceFrame)
            {
                // Pushes the given context onto the CPU thread's stack of current contexts.
                // The specified context becomes the CPU thread's current context, so all CUDA
                // functions that operate on the current context are affected.
                CUDA_DRVAPI_CALL(cuCtxPushCurrent(m_cuContext));
                // Allocate device memory and return a pointer to it in pFrame
                if (m_bDeviceFramePitched)
                {
                    CUDA_DRVAPI_CALL(cuMemAllocPitch((CUdeviceptr *)&pFrame, &m_nDeviceFramePitch, m_nWidth * (m_nBitDepthMinus8 ? 2 : 1), m_nHeight * 3 / 2, 16));
                }
                else
                {
                    // GetFrameSize() returns the current frame size based on the pixel format
                    CUDA_DRVAPI_CALL(cuMemAlloc((CUdeviceptr *)&pFrame, GetFrameSize()));
                }
                CUDA_DRVAPI_CALL(cuCtxPopCurrent(NULL));
            }
            else  // host (CPU) memory
            {
                pFrame = new uint8_t[GetFrameSize()];  // allocate the buffer
            }
            m_vpFrame.push_back(pFrame);
        }
        pDecodedFrame = m_vpFrame[m_nDecodedFrame - 1];  // the buffer for this frame
    }

    CUDA_DRVAPI_CALL(cuCtxPushCurrent(m_cuContext));  // make the context current
    CUDA_MEMCPY2D m = { 0 };
    m.srcMemoryType = CU_MEMORYTYPE_DEVICE;
    m.srcDevice = dpSrcFrame;  // device pointer of the decoded data
    m.srcPitch = nSrcPitch;
    m.dstMemoryType = m_bUseDeviceFrame ? CU_MEMORYTYPE_DEVICE : CU_MEMORYTYPE_HOST;
    m.dstDevice = (CUdeviceptr)(m.dstHost = pDecodedFrame);
    m.dstPitch = m_nDeviceFramePitch ? m_nDeviceFramePitch : m_nWidth * (m_nBitDepthMinus8 ? 2 : 1);
    m.WidthInBytes = m_nWidth * (m_nBitDepthMinus8 ? 2 : 1);
    m.Height = m_nHeight;
    // Copy the luma (Y) plane
    CUDA_DRVAPI_CALL(cuMemcpy2DAsync(&m, m_cuvidStream));

    // Copy the interleaved chroma (UV) plane, which starts m_nSurfaceHeight rows into the source surface
    m.srcDevice = (CUdeviceptr)((uint8_t *)dpSrcFrame + m.srcPitch * m_nSurfaceHeight);
    m.dstDevice = (CUdeviceptr)(m.dstHost = pDecodedFrame + m.dstPitch * m_nHeight);
    m.Height = m_nHeight / 2;
    CUDA_DRVAPI_CALL(cuMemcpy2DAsync(&m, m_cuvidStream));
    CUDA_DRVAPI_CALL(cuStreamSynchronize(m_cuvidStream));
    // Decoding done: pDecodedFrame now holds the frame in NV12 format
    // (an NV12-to-RGBA conversion could be inserted at this point)
    CUDA_DRVAPI_CALL(cuCtxPopCurrent(NULL));  // copy finished, pop the context

    if ((int)m_vTimestamp.size() < m_nDecodedFrame) {
        m_vTimestamp.resize(m_vpFrame.size());
    }
    m_vTimestamp[m_nDecodedFrame - 1] = pDispInfo->timestamp;

    // Unmap the previously mapped video frame
    NVDEC_API_CALL(cuvidUnmapVideoFrame(m_hDecoder, dpSrcFrame));
    return 1;
}
```
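The two cuMemcpy2DAsync calls are easier to follow on the host. Each row of the decoded surface occupies nSrcPitch bytes, which is at least WidthInBytes. The chroma plane starts after m_nSurfaceHeight rows, and that number can be larger than the output height m_nHeight. The sketch below is a plain C++ stand-in. The hypothetical `Copy2D` imitates the srcPitch/dstPitch/WidthInBytes/Height fields of CUDA_MEMCPY2D, and the hypothetical `CopyNv12` repeats the Y-plane and UV-plane copies for an 8-bit frame into a tightly packed buffer.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical host-only imitation of a pitched 2D copy (fields mirror CUDA_MEMCPY2D).
void Copy2D(const uint8_t *src, size_t srcPitch, uint8_t *dst, size_t dstPitch,
            size_t widthInBytes, size_t height) {
    assert(srcPitch >= widthInBytes && dstPitch >= widthInBytes);
    for (size_t row = 0; row < height; row++) {
        std::memcpy(dst + row * dstPitch, src + row * srcPitch, widthInBytes);
    }
}

// Hypothetical helper: copies an 8-bit NV12 surface (row pitch srcPitch, luma plane
// surfaceHeight rows tall) into a packed width x height*3/2 buffer, like the two
// copies in HandlePictureDisplay.
std::vector<uint8_t> CopyNv12(const uint8_t *surface, size_t srcPitch, size_t surfaceHeight,
                              size_t width, size_t height) {
    std::vector<uint8_t> out(width * height * 3 / 2);
    size_t dstPitch = width;  // no device pitch: rows are packed back to back
    // Luma (Y): 'height' rows from the top of the surface.
    Copy2D(surface, srcPitch, out.data(), dstPitch, width, height);
    // Chroma (interleaved UV): starts surfaceHeight rows down in the source,
    // but directly after the last luma row in the destination.
    Copy2D(surface + srcPitch * surfaceHeight, srcPitch,
           out.data() + dstPitch * height, dstPitch, width, height / 2);
    return out;
}
```

With a 4x2 frame stored on a surface with pitch 8 and surface height 4, the UV row is read from surface row 4. The two padding rows between the Y and UV planes are skipped.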

Data Pointers

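After decoding, the frame data is reached through ppFrame (declared as `uint8_t **ppFrame`): ppFrame[i] points to the i-th frame returned by the current Decode call. Because bUseDeviceFrame is false in this sample, these buffers are in host memory.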
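With bUseDeviceFrame set to false and no device pitch, each ppFrame[i] is a tightly packed host buffer. For 8-bit NV12, the buffer holds width*height bytes of Y, followed by width*height/2 bytes of interleaved U,V, with one U,V pair per 2x2 block of pixels. P016 uses the same layout with 2 bytes per sample. The helpers below are hypothetical and not part of the SDK. They compute the size of such a frame and read the Y, U, V values of pixel (x, y) from an 8-bit NV12 buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

struct Yuv { uint8_t y, u, v; };

// Hypothetical helper: size in bytes of a packed 4:2:0 frame
// (NV12 with 1 byte per sample, P016 with 2).
size_t FrameSize420(int width, int height, int bytesPerSample) {
    return (size_t)width * height * 3 / 2 * bytesPerSample;
}

// Hypothetical helper: reads pixel (x, y) from a packed 8-bit NV12 buffer, i.e. a
// full-resolution Y plane followed by one interleaved U,V pair per 2x2 block.
// Width and height are assumed to be even.
Yuv ReadNv12(const uint8_t *frame, int width, int height, int x, int y) {
    assert(x >= 0 && x < width && y >= 0 && y < height);
    const uint8_t *uv = frame + (size_t)width * height;      // start of the UV plane
    size_t uvIndex = (size_t)(y / 2) * width + (x / 2) * 2;  // each UV row is 'width' bytes
    return Yuv{ frame[(size_t)y * width + x], uv[uvIndex], uv[uvIndex + 1] };
}
```

For the 3840x1920 8-bit test clip, `FrameSize420(3840, 1920, 1)` gives 11,059,200 bytes per frame.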

Iterating over the YUV values of every pixel:

```cpp
void DecodeMediaFile(CUcontext cuContext, const char *szInFilePath, const char *szOutFilePath, bool bOutPlanar,
    const Rect &cropRect, const Dim &resizeDim)
{
    // Open the output file
    std::ofstream fpOut(szOutFilePath, std::ios::out | std::ios::binary);
    if (!fpOut)
    {
        std::ostringstream err;
        err << "Unable to open output file: " << szOutFilePath << std::endl;
        throw std::invalid_argument(err.str());
    }

    // Demux the input file; FFmpegDemuxer is a class that wraps FFmpeg's demuxing
    FFmpegDemuxer demuxer(szInFilePath);
    // Create the hardware decoder; the 4th argument (bUseDeviceFrame) is false,
    // so decoded frames are copied to host memory
    NvDecoder dec(cuContext, demuxer.GetWidth(), demuxer.GetHeight(), false, FFmpeg2NvCodecId(demuxer.GetVideoCodec()),
        NULL, false, false, &cropRect, &resizeDim);

    int nVideoBytes = 0, nFrameReturned = 0, nFrame = 0;
    uint8_t *pVideo = NULL, **ppFrame;
    do {
        // Demux: pVideo is overwritten with pkt.data; nVideoBytes is its size in bytes
        demuxer.Demux(&pVideo, &nVideoBytes);
        // The actual decoding happens here
        dec.Decode(pVideo, nVideoBytes, &ppFrame, &nFrameReturned);
        if (!nFrame && nFrameReturned)
            LOG(INFO) << dec.GetVideoInfo();  // print information about the video stream

        // Hardware decoding is asynchronous: nFrameReturned can be 0 or more than 1
        for (int i = 0; i < nFrameReturned; i++) {
            if (bOutPlanar) {
                // Convert NV12/P016 to planar format
                ConvertToPlanar(ppFrame[i], dec.GetWidth(), dec.GetHeight(), dec.GetBitDepth());
            }
            // Print every byte of the frame. This assumes 8-bit output (1 byte per sample):
            // Y plane first, then interleaved U,V (NV12) or separate U and V planes after ConvertToPlanar.
            // For a 3840x1920 frame this is over 11 million printf calls per frame, so it is very slow.
            for (int j = 0; j < dec.GetWidth() * dec.GetHeight() * 3 / 2; j++) {
                printf(" %d, ", ppFrame[i][j]);
            }
            // Write the frame; GetFrameSize() returns the current frame size based on the pixel format
            fpOut.write(reinterpret_cast<char*>(ppFrame[i]), dec.GetFrameSize());
        }
        nFrame += nFrameReturned;
    } while (nVideoBytes);

    std::cout << "Total frame decoded: " << nFrame << std::endl
        << "Saved in file " << szOutFilePath << " in "
        << (dec.GetBitDepth() == 8 ? (bOutPlanar ? "iyuv" : "nv12") : (bOutPlanar ? "yuv420p16" : "p016"))
        << " format" << std::endl;
    fpOut.close();
}
```
