......Marking my first Python web crawler,

首发于本人同名博客园......

"""

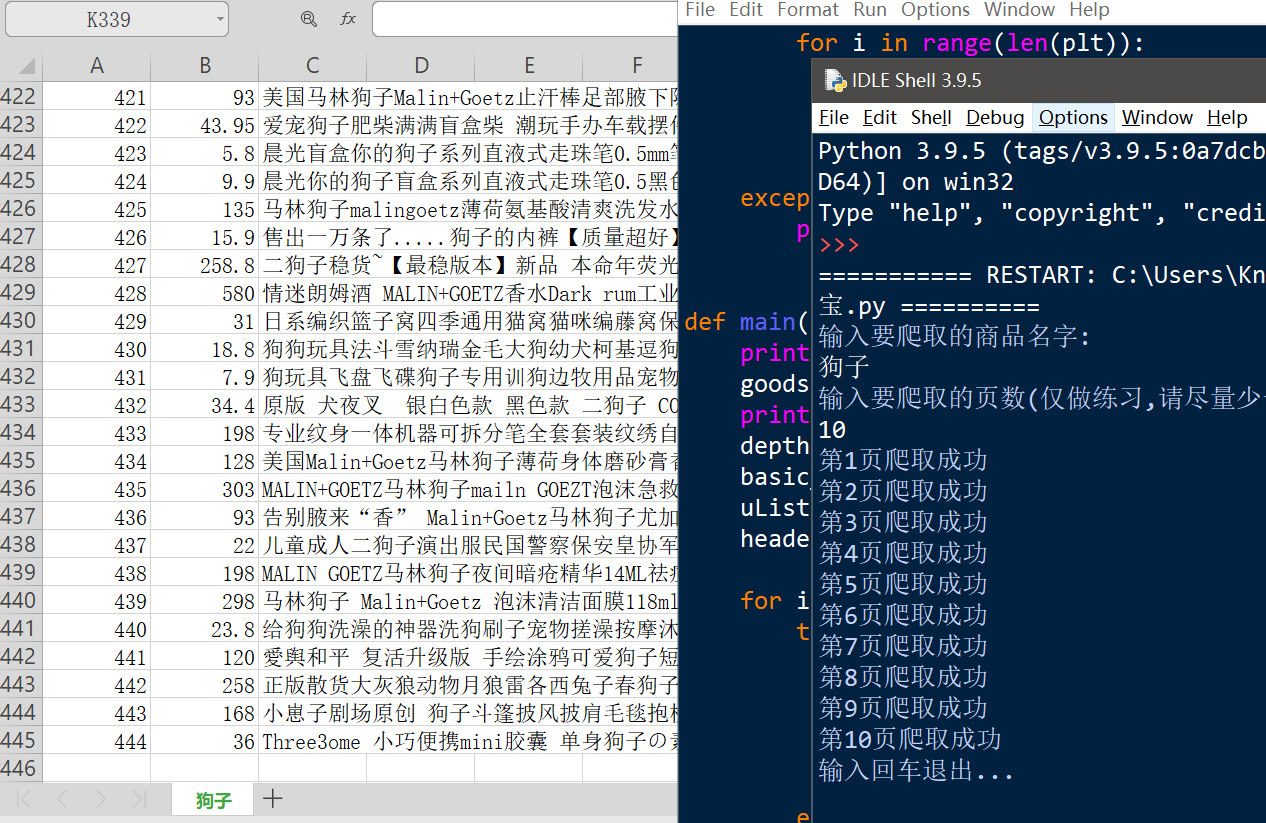

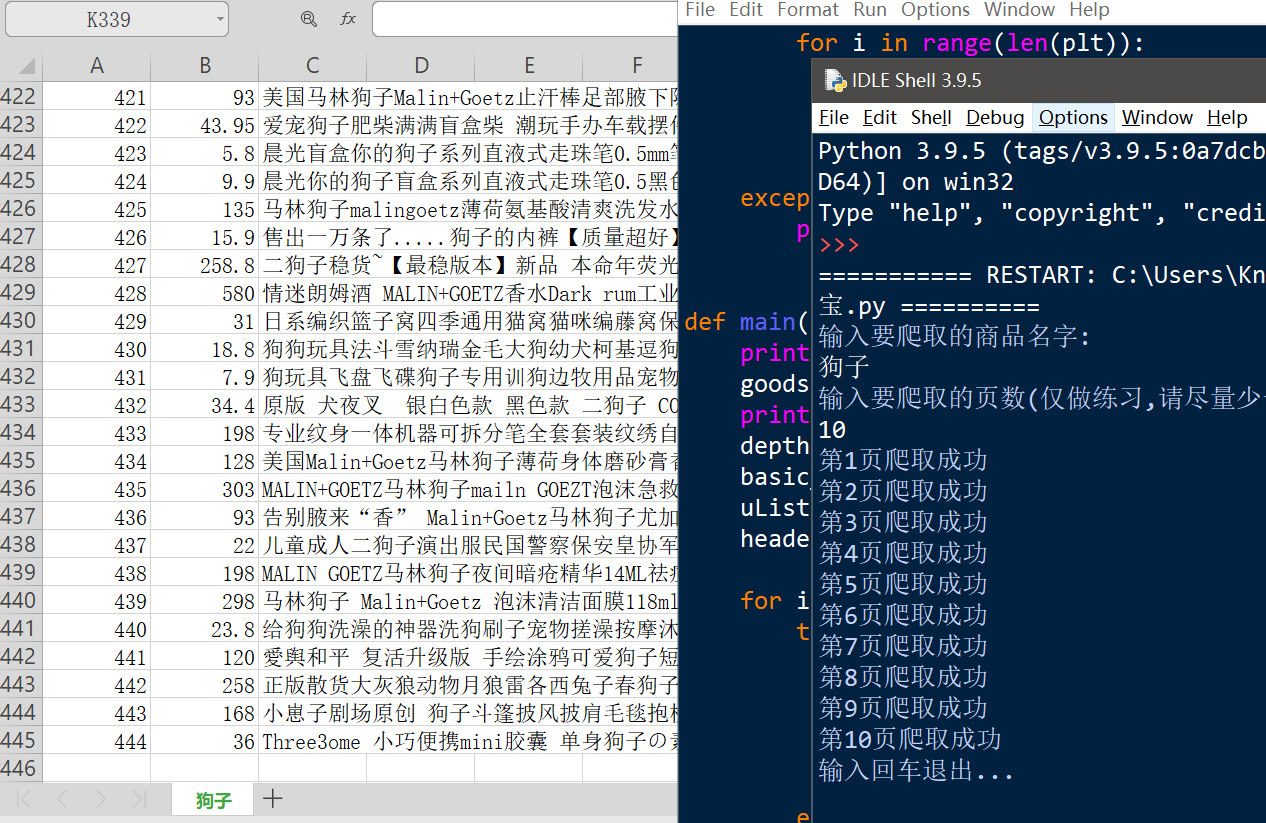

Created on Thu Jun 10 21:42:17 2021@author: 泥烟本爬虫可实现指定商品,指定页数的信息爬取,仅用来学习

具有时效性(cookie会过期,用的时候手动改一下即可)

"""import requests

import re

import csv

import timecount=1

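```python
# Step 1: submit the product search request and fetch each results page
def getHTMLText(url):
    # The cookie is omitted here; paste your own (it expires periodically)
    me = {'cookie': '(omitted)', 'User-Agent': 'Mozilla/5.0'}
    try:
        r = requests.get(url, headers=me, timeout=30)
        r.raise_for_status()               # raise on 4xx/5xx status codes
        r.encoding = r.apparent_encoding   # guess the charset from the body
        return r.text
    except requests.RequestException:
        return ""                          # empty string signals a failed fetch
```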
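Step 1 loops over result pages by appending an `s` offset of 44 × page index to the search URL (done in `main`). The sketch below isolates just that URL construction, assuming, as the original code does, 44 items per page. `page_urls`, `ITEMS_PER_PAGE` and `BASE` are hypothetical names for this illustration; the helper uses `urllib.parse.urlencode` so keywords containing spaces or Chinese characters are percent-encoded explicitly instead of being concatenated raw.

```python
from urllib.parse import urlencode

# Assumption carried over from the original code: 44 items per results page,
# and the "s" query parameter is the offset of the first item on page i.
ITEMS_PER_PAGE = 44
BASE = "https://s.taobao.com/search"


def page_urls(keyword, pages):
    """Return one search URL per page, percent-encoding the keyword."""
    urls = []
    for i in range(pages):
        query = urlencode({"q": keyword, "s": ITEMS_PER_PAGE * i})
        urls.append(BASE + "?" + query)
    return urls


for u in page_urls("laptop bag", 2):
    print(u)
```

Each URL could be passed to `getHTMLText` in place of the concatenated string; whether Taobao still honours the `s` offset this way is not something the sketch can confirm.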

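```python
# Step 2: for each page, extract each item's serial number, price and title
def parsePage(ilt, html):
    global count  # running serial number, continues across pages
    # Capture groups return just the values, so no split()/eval() is needed
    prices = re.findall(r'"view_price":"([\d.]*)"', html)
    titles = re.findall(r'"raw_title":"(.*?)"', html)
    # zip() stops at the shorter list, avoiding an IndexError if they differ
    for price, title in zip(prices, titles):
        ilt.append([count, price, title])
        count += 1


def main():
    goods = input('Enter the product to search for: ')
    depth = int(input('Enter the number of pages to crawl '
                      '(practice only, please keep it under 10): '))
    basic_url = 'https://s.taobao.com/search?q=' + goods
    uList = []
    header = ["No.", "Price", "Product name"]
    for i in range(depth):
        # 44 items per page, so s is the offset of the first item on page i
        url = basic_url + '&s=' + str(44 * i)
        html = getHTMLText(url)
        if not html:
            print(f"Page {i + 1} failed, skipping")
            continue
        parsePage(uList, html)
        print(f"Page {i + 1} crawled successfully")
        time.sleep(0.5)  # pause between requests

    # Step 3: save the results to a CSV file named after the product
    filename = goods + ".csv"
    # 'w' (not 'a') so a rerun does not append a second header row;
    # utf-8-sig lets Excel display the Chinese titles correctly
    with open(filename, 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(uList)


if __name__ == '__main__':
    main()
    input("Press Enter to exit...")
```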
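The regex extraction in step 2 is the fragile part of the crawler. The sketch below runs on a hypothetical hard-coded snippet (not real Taobao output, whose format may differ or change) and shows why capture groups plus `zip()` are safer than `split(':')` followed by `eval()`: a title containing a colon would make the split-and-eval approach raise, and a bare `except` would then silently drop the rest of that page. `parse_items`, `rows_to_csv` and `SAMPLE` are hypothetical names; writing to an `io.StringIO` buffer stands in for the CSV file of step 3.

```python
import csv
import io
import re

# Hypothetical snippet shaped like the fields the crawler's regexes target
SAMPLE = ('{"raw_title":"Canvas bag: large","view_price":"59.00"},'
          '{"raw_title":"Leather wallet","view_price":"128.50"}')

PRICE_RE = re.compile(r'"view_price":"([\d.]*)"')
TITLE_RE = re.compile(r'"raw_title":"(.*?)"')


def parse_items(html, start=1):
    """Return [serial, price, title] rows; serials start at `start`."""
    prices = PRICE_RE.findall(html)
    titles = TITLE_RE.findall(html)
    # zip() pairs prices with titles and ignores any unmatched leftovers
    return [[n, p, t] for n, (p, t) in enumerate(zip(prices, titles), start)]


def rows_to_csv(rows, header=("No.", "Price", "Product name")):
    """Serialize rows to CSV text, as csv.writer would write them to a file."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(header)
    writer.writerows(rows)
    return buf.getvalue()


rows = parse_items(SAMPLE)
print(rows)
print(rows_to_csv(rows))
```

The `start` parameter plays the role of the global `count`: passing the next serial number keeps numbering continuous across pages without a global variable.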