
- Related

- [Notes] Docker basics (concepts, image commands, container commands, …) - https://blog.csdn.net/LawssssCat/article/details/104009903

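Networking requirements

- Communication between a container and the outside

- Communication between containers

Contents

- Network architecture

- Network modes

- # bridge

- # host

- # none

- # container

- User-defined networks

- # --link (legacy)

- # --network: user-defined networks

- Problem: two networks cannot reach each other

- Solution: join the two networks with connect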

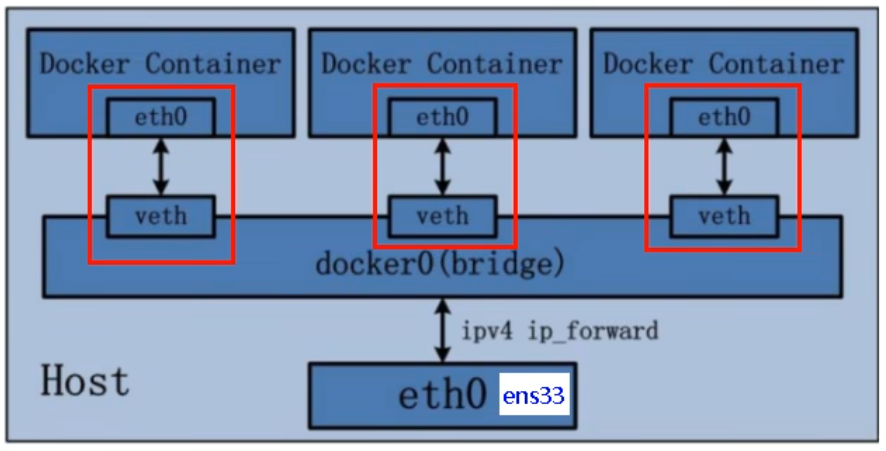

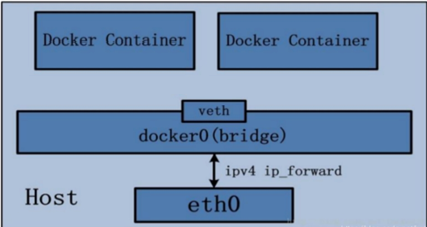

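Network architecture

The docker0 virtual bridge

As soon as Docker is installed, the host gains a network interface named docker0 that works as a bridge. Every container started on the default network is attached to docker0 and receives an IP address from the docker0 subnet. The attachment uses veth pairs (veth = virtual Ethernet).

A veth pair is a pair of virtual network interfaces that always exist together and behave like a cable: whatever enters one end comes out of the other, so the pair can bridge virtual devices. 💡 OpenStack, the links between Docker containers, and OVS connections all use this technique.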

Diagram:

e.g.

| | Host | Container (tomcat01) | Container (tomcat02) |
|---|---|---|---|
| Virtual interface | docker0 | vethc7a3a84 | vethde49a31 |
| IP | 172.17.0.1 | 172.17.0.2 | 172.17.0.3 |
| Gateway | | 172.17.0.1 | 172.17.0.1 |

- Install Docker: https://blog.csdn.net/LawssssCat/article/details/104009903#docker-install

- Check the newly added interface (docker0 is the virtual interface Docker uses in bridge mode):

$ ifconfig | grep docker -A 10

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
        inet6 fe80::42:27ff:fe72:addf prefixlen 64 scopeid 0x20<link>
        ether 02:42:27:72:ad:df txqueuelen 0 (Ethernet)
        RX packets 13586 bytes 844307 (844.3 KB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 19150 bytes 106002914 (106.0 MB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

- Create and run two containers:

$ docker run -d --name tomcat01 tomcat

$ docker run -d --name tomcat02 tomcat

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

daadf8ee336f tomcat "catalina.sh run" 2 minutes ago Up 2 minutes 8080/tcp tomcat02

92009f85260e tomcat "catalina.sh run" 2 minutes ago Up 2 minutes 8080/tcp tomcat01

- Run ifconfig again: two more interfaces have appeared.

💡 These two virtual interfaces serve the containers. (This also shows that containers really use the host's resources.)

vethc7a3a84: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet6 fe80::6488:a8ff:fe61:1082 prefixlen 64 scopeid 0x20<link>
        ether 66:88:a8:61:10:82 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 6 bytes 516 (516.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vethde49a31: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet6 fe80::bc0d:a6ff:fecc:b40f prefixlen 64 scopeid 0x20<link>
        ether be:0d:a6:cc:b4:0f txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 13 bytes 1102 (1.1 KB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

How can we verify that the containers are attached through these two virtual interfaces? Start with the inspect command.

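- Use the inspect command to view the container's network settings. The gateway and subnet show that the container sits behind docker0. Note that the MAC address reported here belongs to the container's own eth0 (the container-side end of the veth pair), so it differs from the MAC of the host-side veth interface listed by ifconfig.

tomcat01

$ docker inspect tomcat01 -f "{{json .NetworkSettings.Networks}}" | jq

{
  "bridge": {
    "IPAMConfig": null,
    "Links": null,
    "Aliases": null,
    "NetworkID": "1a4165d570d1afdbdda155ab9fb8301d1a98bdf93c6d55b7dbe4f165f9cea071",
    "EndpointID": "db17654a4d8c6400f69393b608429389fbc0194452fa38ccb94da1689165faa5",
    "Gateway": "172.17.0.1", <------------------- ⚠️ the gateway is docker0's IP address
    "IPAddress": "172.17.0.2",
    "IPPrefixLen": 16,
    "IPv6Gateway": "",
    "GlobalIPv6Address": "",
    "GlobalIPv6PrefixLen": 0,
    "MacAddress": "02:42:ac:11:00:02", <------------- ⚠️ MAC of the container's eth0; the host-side vethc7a3a84 has a different MAC (ether 66:88:a8:61:10:82)
    "DriverOpts": null
  }
}

The same applies to tomcat02.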
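Because the two ends of a veth pair carry different MAC addresses, a more reliable way to pair a container with its host-side interface is the interface index: inside the container, /sys/class/net/eth0/iflink holds the index of the peer on the host. The sketch below is only an illustration under assumptions: the helper name find_iface_by_index and the index numbers in the sample are hypothetical, and only the interface names come from the output above.

```shell
#!/bin/sh
# Print the name of the interface whose index equals $1,
# reading `ip -o link` style lines from standard input.
find_iface_by_index() {
    awk -v idx="$1" -F': ' '$1 == idx { sub(/@.*/, "", $2); print $2 }'
}

# Sample host output (index numbers are made up for illustration).
sample_links='1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
7: vethc7a3a84@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master docker0 state UP
9: vethde49a31@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master docker0 state UP'

printf '%s\n' "$sample_links" | find_iface_by_index 7

# On a real host (assumes the image ships `cat`):
#   idx=$(docker exec tomcat01 cat /sys/class/net/eth0/iflink)
#   ip -o link | find_iface_by_index "$idx"
```

With the sample data, index 7 resolves to vethc7a3a84; on a real host the index read from the container replaces the hard-coded 7.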

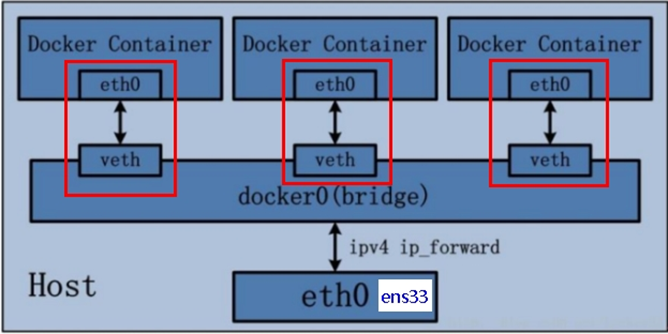

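Network modes

Docker is often said to handle hundreds or thousands of containers, yet the example above shows that every container on the default bridge needs its own virtual interface. Intuitively that is not very elegant, and not every container needs it.

Docker therefore offers several network modes: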

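| Network mode | Option | Summary |
|---|---|---|
| bridge (default) | --network bridge | Gives each container its own virtual interface (its own IP and ports), with docker0 (the virtual bridge) as the gateway |
| host | --network host | Uses the host's IP and ports directly (💡 the container has no network namespace, interface, IP or gateway of its own) |
| none | --network none | No network configuration at all [for containers that must stay offline] |
| container | --network container:NAME/ID | Shares the IP, port range and so on of the specified container |

Below we use ifconfig and docker inspect to look at the network configuration in each mode.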

# bridge

Create the container

# docker run -d --name t1 --network bridge busybox sleep infinity

$ docker run -d --name t1 busybox sleep infinity

e44bcbfdf7aba2a951b490a2f45dc0715fe709a2af15027efd35bea59d7be92b

Change on the host: a virtual interface named veth9f9ad4b is created

$ ifconfig

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
        inet6 fe80::42:81ff:febf:798a prefixlen 64 scopeid 0x20<link>
        ether 02:42:81:bf:79:8a txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 7 bytes 626 (626.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth9f9ad4b: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet6 fe80::c4b4:caff:fefe:f43e prefixlen 64 scopeid 0x20<link>
        ether c6:b4:ca:fe:f4:3e txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 8 bytes 696 (696.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Container details

$ docker inspect t1 --format "{{json .NetworkSettings.Networks}}" | jq

{
  "bridge": {
    "IPAMConfig": null,
    "Links": null,
    "Aliases": null,
    "NetworkID": "1a4165d570d1afdbdda155ab9fb8301d1a98bdf93c6d55b7dbe4f165f9cea071",
    "EndpointID": "17780b020d4e9b34625350c44629f6dba16a290a35da21b602d12ea97bf77597",
    "Gateway": "172.17.0.1", <------------- ⚠️ a gateway is set
    "IPAddress": "172.17.0.2", <------------- ⚠️ an IP is assigned
    "IPPrefixLen": 16,
    "IPv6Gateway": "",
    "GlobalIPv6Address": "",
    "GlobalIPv6PrefixLen": 0,
    "MacAddress": "02:42:ac:11:00:02", <------------- ⚠️ the container has its own interface (identified by its MAC address)
    "DriverOpts": null
  }
}

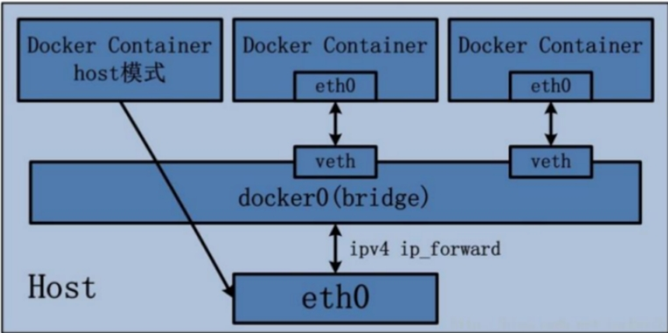

# host

Create the container

$ docker run -d --name t2 --network host busybox sleep infinity

add1e7c8cec027c879ac53ece220438980a5db555d8a6ba9314d86df95c373f6

The container details no longer show any IP, gateway or MAC address

$ docker inspect t2 --format "{{json .NetworkSettings.Networks}}" | jq

{
  "host": {
    "IPAMConfig": null,
    "Links": null,
    "Aliases": null,
    "NetworkID": "5db86cb4951a73b2f745de0387afeccd8c8d54c00bf0f915b6cb8f5e779d03c7",
    "EndpointID": "6aaaaec8926a4e9224711981161dcbdee6e2d63160cdaa228ee6b517023557d1",
    "Gateway": "",
    "IPAddress": "",
    "IPPrefixLen": 0,
    "IPv6Gateway": "",
    "GlobalIPv6Address": "",
    "GlobalIPv6PrefixLen": 0,
    "MacAddress": "",
    "DriverOpts": null
  }
}

However, the interfaces seen from inside the container are the same as the host's

# Interfaces on the host

$ ifconfig

# Interfaces inside the container

$ docker exec -it t2 ifconfig

# none

Create the container

$ docker run -d --name t3 --network none busybox sleep infinity

803aaad0fd2798cd0b61284501408a0bee4bf48080020807e6f0073e36b8738f

The container details look the same as in host mode: nothing is configured

$ docker inspect t3 --format "{{json .NetworkSettings.Networks}}" | jq

{
  "none": {
    "IPAMConfig": null,
    "Links": null,
    "Aliases": null,
    "NetworkID": "a3dae9dcd74bb14f92e2ea80f2a559faefe18f12471b2f8fca6dbe255952ded8",
    "EndpointID": "b13562e75470c080b0d1b93084c60d96b7bd08cd276b24767b43590989043633",
    "Gateway": "",
    "IPAddress": "",
    "IPPrefixLen": 0,
    "IPv6Gateway": "",
    "GlobalIPv6Address": "",
    "GlobalIPv6PrefixLen": 0,
    "MacAddress": "",
    "DriverOpts": null
  }
}

Inside the container, however, there is only the loopback interface. (💡 So it cannot even talk to the host over the network, let alone to external networks!)

$ docker exec -it t3 ifconfig

lo Link encap:Local Loopback
        inet addr:127.0.0.1 Mask:255.0.0.0
        UP LOOPBACK RUNNING MTU:65536 Metric:1
        RX packets:0 errors:0 dropped:0 overruns:0 frame:0
        TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
        collisions:0 txqueuelen:1000
        RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

# container

💡 Earlier we created a container named t1 in bridge mode; the new container here shares its network.

Create the container

$ docker run -d --name t4 --network container:t1 busybox sleep infinity

f6072840f8958a440fac4ae895d170dc890f956a9fdce93ccac7e5dd9739ef94

Inspect the container's network settings: surprisingly, nothing is configured

$ docker inspect t4 --format "{{json .NetworkSettings.Networks}}" | jq

{}

Looking inside the containers confirms that t4's network is identical to t1's

$ docker exec -it t1 ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02
        inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0
        UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
        RX packets:17 errors:0 dropped:0 overruns:0 frame:0
        TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
        collisions:0 txqueuelen:0
        RX bytes:1326 (1.2 KiB) TX bytes:0 (0.0 B)

$ docker exec -it t4 ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02
        inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0
        UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
        RX packets:17 errors:0 dropped:0 overruns:0 frame:0
        TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
        collisions:0 txqueuelen:0
        RX bytes:1326 (1.2 KiB) TX bytes:0 (0.0 B)

So where is the container relationship recorded? See below

$ docker inspect t4 --format "{{json .HostConfig.NetworkMode}}" | jq

"container:e44bcbfdf7aba2a951b490a2f45dc0715fe709a2af15027efd35bea59d7be92b"

$ docker inspect t1 --format "{{json .Id}}" | jq

"e44bcbfdf7aba2a951b490a2f45dc0715fe709a2af15027efd35bea59d7be92b"

As shown, t4's configuration holds the container: prefix followed by t1's container ID, which means t4 is attached to t1 in container mode.
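The .HostConfig.NetworkMode value seen above is enough to tell the four modes apart. As a minimal sketch, the hypothetical helper classify_network_mode below maps such a value to a description. It assumes that any value other than the built-in names is a user-defined network, and that some Docker versions report `default` rather than `bridge` for a container started without --network.

```shell
#!/bin/sh
# Describe a .HostConfig.NetworkMode value passed as $1.
classify_network_mode() {
    case "$1" in
        default|bridge) echo "bridge" ;;
        host)           echo "host" ;;
        none)           echo "none" ;;
        container:*)    echo "container (shares the network of ${1#container:})" ;;
        *)              echo "user-defined network: $1" ;;
    esac
}

classify_network_mode "container:e44bcbfdf7ab"
classify_network_mode "host"

# On a real host:
#   classify_network_mode "$(docker inspect t4 --format '{{.HostConfig.NetworkMode}}')"
```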

User-defined networks

Normally, two containers that both use the default (bridge) network mode can ping each other by IP.

$ docker run -d --name b1 busybox sleep infinity

4056cf26aba1a7274a9f8fa4a1360ff8f3bfddfb22db5c1aace04079e5f458ad

$ docker exec -it b1 ifconfig eth0

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02
        inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0
        UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
        RX packets:25 errors:0 dropped:0 overruns:0 frame:0
        TX packets:12 errors:0 dropped:0 overruns:0 carrier:0
        collisions:0 txqueuelen:0
        RX bytes:2358 (2.3 KiB) TX bytes:682 (682.0 B)

$ docker run --rm --name t2 busybox ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

64 bytes from 172.17.0.2: seq=0 ttl=64 time=0.101 ms

64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.089 ms

64 bytes from 172.17.0.2: seq=2 ttl=64 time=0.080 ms

^C

--- 172.17.0.2 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.079/0.087/0.101 ms

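⚡ However, we normally do not address containers by IP ⚡

⚠️ A container's internal IP can change, so for container-to-container communication we normally identify the target by its container name, which Docker resolves to the current IP, rather than by the IP itself.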

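# --link (legacy)

We want to reach a changing IP through an identifier that does not change, and so communicate with the container over the network. (💡 With --link, Docker does this by maintaining the name-to-IP entries in the container's hosts file.)

The stable identifier is simply the container name given with --name xxx.

To have Docker maintain the mapping between the target container's name and its IP, pass --link when creating the container

$ docker run -d --name t4 --link t1 busybox sleep infinity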
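The hosts-file mechanism described above can be pictured with a small lookup. The sketch below is an assumption-laden illustration: lookup_hosts is a hypothetical helper, and the sample text only imitates the kind of entry --link adds (the shortened container IDs are placeholders, not captured output).

```shell
#!/bin/sh
# Print the IP mapped to host name $1 in hosts-format text read from stdin.
lookup_hosts() {
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }   # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    '
}

# Illustrative content of /etc/hosts inside the linking container.
sample_hosts='127.0.0.1 localhost
172.17.0.2 t1 e44bcbfdf7ab
172.17.0.3 f6072840f895'

printf '%s\n' "$sample_hosts" | lookup_hosts t1

# On a real host:
#   docker exec t4 cat /etc/hosts | lookup_hosts t1
```

If t1 is recreated with a different IP, only this one entry has to change, which is the part Docker takes care of for linked containers.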

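💡 Maintaining these relationships one by one is inconvenient: adding a link means recreating the container, which is clearly unacceptable in production.

This leads to user-defined networks (--network), introduced next.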

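# --network: user-defined networks

Unlike --link, which wires up one specific container at creation time, --network works by creating the network first and then naming it when containers are created. (💡 Adding a container to the network then requires no stop or rebuild of the existing containers.)

docker network subcommands:

- connect: connect a container to a network

- create: create a new network

- disconnect: disconnect a container from a network

- inspect: show details

- ls: list networks

- prune: remove unused networks

- rm: remove the specified networks

e.g.

Create a network:

# 💡 --driver: Docker provides three user-defined network drivers: bridge (default), overlay and macvlan. overlay and macvlan are used to build networks that span hosts

# 💡 --subnet, --gateway: set the subnet and the gateway

# docker network create --driver bridge --subnet 192.168.11.0/24 --gateway 192.168.11.1 mynet

# docker network create --driver bridge my_net

$ docker network create my_net

3c9546a5f73fa4b72444bca05df6a7bd2165606c7da0683ff14e39b3f3775e3c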
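When picking --subnet, --gateway or a static --ip, the address has to fall inside the chosen subnet. The following is a minimal sketch of that check in plain shell arithmetic. The helper names ip_to_int and in_subnet are hypothetical, and the code assumes well-formed IPv4 input without leading zeros.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1            # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed when address $1 lies inside CIDR block $2 (e.g. 172.19.0.0/16).
in_subnet() {
    addr=$(ip_to_int "$1")
    base=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( base & mask )) ]
}

in_subnet 192.168.11.1 192.168.11.0/24 && echo "192.168.11.1 is inside 192.168.11.0/24"
in_subnet 172.17.0.2 172.19.0.0/16 || echo "172.17.0.2 is outside 172.19.0.0/16"
```

The same check also explains why an address on docker0 (172.17.0.0/16) and one on my_net (172.19.0.0/16) are never on the same link.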

List the networks

$ docker network ls

NETWORK ID NAME DRIVER SCOPE

1a4165d570d1 bridge bridge local

5db86cb4951a host host local

460db8501061 my_net bridge local <---- ⚠️ the newly created network

a3dae9dcd74b none null local

$ ifconfig

br-3c9546a5f73f: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500 <------ ⚠️ the newly created virtual interface
        inet 172.19.0.1 netmask 255.255.0.0 broadcast 172.19.255.255
        inet6 fe80::42:f8ff:fe55:9703 prefixlen 64 scopeid 0x20<link>
        ether 02:42:f8:55:97:03 txqueuelen 0 (Ethernet)
        RX packets 1 bytes 28 (28.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 5 bytes 446 (446.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
        inet6 fe80::42:81ff:febf:798a prefixlen 64 scopeid 0x20<link>
        ether 02:42:81:bf:79:8a txqueuelen 0 (Ethernet)
        RX packets 9667 bytes 739206 (739.2 KB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 9763 bytes 25993779 (25.9 MB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Create containers and attach them to the network

# 💡 --net: select the user-defined network (alias of --network)

# 💡 --ip: assign a static IP

# docker run -d --name n1 --net my_net --ip 172.19.0.8 busybox sleep infinity

$ docker run -d --name n1 --net my_net busybox sleep infinity

$ docker run -d --name n2 --net my_net busybox sleep infinity

Pinging by container name works:

$ docker exec -it n1 ping n2

PING n2 (172.19.0.3): 56 data bytes

64 bytes from 172.19.0.3: seq=0 ttl=64 time=0.101 ms

64 bytes from 172.19.0.3: seq=1 ttl=64 time=0.141 ms

64 bytes from 172.19.0.3: seq=2 ttl=64 time=0.094 ms

64 bytes from 172.19.0.3: seq=3 ttl=64 time=0.135 ms

^C

--- n2 ping statistics ---

4 packets transmitted, 4 packets received, 0% packet loss

round-trip min/avg/max = 0.094/0.117/0.141 ms
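The whole life cycle of a user-defined network (create, attach at start-up, attach later, detach, clean up) can be rehearsed with a dry-run wrapper before touching a real host. This is a sketch only: run and network_lifecycle are hypothetical helpers and the container names are derived from the network name purely for illustration; the docker subcommands themselves are the ones listed earlier.

```shell
#!/bin/sh
# Echo each command; execute it only when DRY_RUN is not set to 1.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-0}" = "1" ] || "$@"
}

# Walk through the life cycle of a user-defined network named $1.
network_lifecycle() {
    net="$1"
    run docker network create "$net"
    run docker run -d --name "${net}_a" --network "$net" busybox sleep infinity
    run docker run -d --name "${net}_b" busybox sleep infinity
    run docker network connect "$net" "${net}_b"      # join a running container
    run docker exec "${net}_a" ping -c 1 "${net}_b"   # resolve the peer by name
    run docker network disconnect "$net" "${net}_b"
    run docker rm -f "${net}_a" "${net}_b"
    run docker network rm "$net"
}

DRY_RUN=1
network_lifecycle demo_net
```

With DRY_RUN=1 the script only prints the eight commands; setting DRY_RUN=0 would execute them against the local Docker daemon.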

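Problem: two networks cannot reach each other

Continuing from the steps above: the my_net network exists and containers n1 and n2 have joined it.

Now create another container, n3, in the default bridge mode

$ docker run -d --name n3 busybox sleep infinity

Can n3 communicate with n1 and n2? No. The name n3 cannot be resolved from my_net, and pinging n3's IP fails as well because the firewall blocks the traffic.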

$ docker exec -it n1 ping n3

ping: bad address 'n3'

$ docker exec -it n3 ip addr

172.17.0.2/16

$ docker exec -it n1 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2): 56 data bytes

^C

--- 172.17.0.2 ping statistics ---

5 packets transmitted, 0 packets received, 100% packet loss

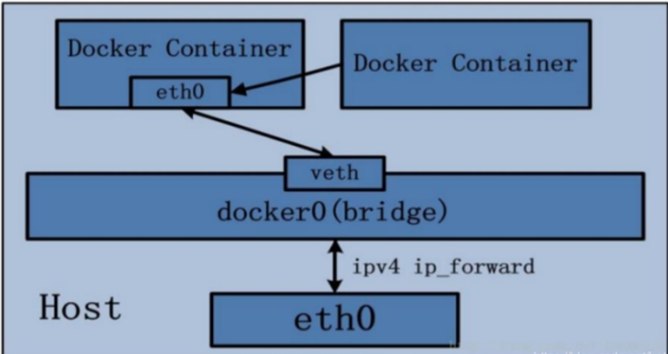

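Start with the network topology: judging by the topology alone, communication should work as long as the host allows IP forwarding

- host
  - my_net
    - n1
    - n2
  - docker0
    - n3

Is IP forwarding allowed? Yes, and the host has a route for each bridge.

$ ip r

default via 192.168.1.1 dev ens33 proto static

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 <-------- ⚠️ route for docker0 is present

172.19.0.0/16 dev br-3c9546a5f73f proto kernel scope link src 172.19.0.1 <-------- ⚠️ route for the my_net bridge is present

192.168.1.0/24 dev ens33 proto kernel scope link src 192.168.1.111

$ sysctl net.ipv4.ip_forward <-------- ⚠️ IP forwarding is enabled

net.ipv4.ip_forward = 1

That leaves the firewall as the only possible cause of the failed ping. And indeed: the chain name DOCKER-ISOLATION shows that Docker isolates different networks by design (official documentation: https://docs.docker.com/network/iptables/)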

$ sudo iptables -L

Chain FORWARD (policy DROP)

target prot opt source destination

DOCKER-USER all -- anywhere anywhere

DOCKER-ISOLATION-STAGE-1 all -- anywhere anywhere

... (omitted) ...

Chain DOCKER (2 references)

target prot opt source destination

Chain DOCKER-ISOLATION-STAGE-1 (1 references)

target prot opt source destination

DOCKER-ISOLATION-STAGE-2 all -- anywhere anywhere

DOCKER-ISOLATION-STAGE-2 all -- anywhere anywhere

RETURN all -- anywhere anywhere

Chain DOCKER-ISOLATION-STAGE-2 (2 references)

target prot opt source destination

DROP all -- anywhere anywhere <------- ⚠️ drops traffic between bridges; the interface match is hidden without -v, so the rule only appears to drop everything

DROP all -- anywhere anywhere

RETURN all -- anywhere anywhere

Chain DOCKER-USER (1 references)

target prot opt source destination

RETURN all -- anywhere anywhere
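To make the two isolation stages easier to follow, here is a simplified model of the decision they take for a forwarded packet. It rests on an assumption about the rule layout that `iptables -L` hides and `iptables -L -v` or `iptables -S` would show: stage 1 matches packets that enter on a Docker bridge and leave through a different interface, and stage 2 drops them when the outgoing interface is also a Docker bridge. The exact chains differ between Docker versions, and the function names below are hypothetical.

```shell
#!/bin/sh
# Space-separated list of Docker-managed bridges (names taken from this article).
DOCKER_BRIDGES="docker0 br-3c9546a5f73f"

# Succeed when interface $1 is one of the Docker bridges.
is_docker_bridge() {
    case " $DOCKER_BRIDGES " in
        *" $1 "*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Verdict of the modelled isolation chains for a packet that
# enters on interface $1 and leaves on interface $2.
isolation_verdict() {
    in_if="$1"; out_if="$2"
    # Stage 1: only packets arriving on a Docker bridge and leaving
    # through another interface are handed to stage 2.
    if is_docker_bridge "$in_if" && [ "$in_if" != "$out_if" ]; then
        # Stage 2: drop when the outgoing interface is a Docker bridge too.
        if is_docker_bridge "$out_if"; then
            echo DROP
            return
        fi
    fi
    echo RETURN
}

isolation_verdict br-3c9546a5f73f docker0   # n1 -> n3: prints DROP
isolation_verdict docker0 ens33             # n3 -> outside: prints RETURN
```

In this model the ping from n1 to 172.17.0.2 is dropped, while traffic from a container to the physical interface ens33 passes on to the remaining FORWARD rules.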

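Solution: join the two networks with connect

As shown above, the firewall blocks forwarding between the two Docker networks, so they cannot reach each other.

Docker does not recommend editing the firewall rules directly to join two networks; the recommended way is the connect command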

$ docker network connect my_net n3

$ docker exec -it n1 ping n3

PING n3 (172.19.0.4): 56 data bytes

64 bytes from 172.19.0.4: seq=0 ttl=64 time=0.143 ms

64 bytes from 172.19.0.4: seq=1 ttl=64 time=0.150 ms

^C

--- n3 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.143/0.146/0.150 ms

What does connect actually do? It adds a virtual interface to the target container, attached directly to the target network

$ docker exec -it n1 ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:13:00:02
        inet addr:172.19.0.2 Bcast:172.19.255.255 Mask:255.255.0.0
        UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
        RX packets:44 errors:0 dropped:0 overruns:0 frame:0
        TX packets:27 errors:0 dropped:0 overruns:0 carrier:0
        collisions:0 txqueuelen:0
        RX bytes:3468 (3.3 KiB) TX bytes:1942 (1.8 KiB)

$ docker exec -it n3 ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:02
        inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0
        UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
        RX packets:16 errors:0 dropped:0 overruns:0 frame:0
        TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
        collisions:0 txqueuelen:0
        RX bytes:1256 (1.2 KiB) TX bytes:0 (0.0 B)

eth1 Link encap:Ethernet HWaddr 02:42:AC:13:00:04 <------ ⚠️ the newly added virtual interface
        inet addr:172.19.0.4 Bcast:172.19.255.255 Mask:255.255.0.0
        UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1
        RX packets:14 errors:0 dropped:0 overruns:0 frame:0
        TX packets:4 errors:0 dropped:0 overruns:0 carrier:0
        collisions:0 txqueuelen:0
        RX bytes:1076 (1.0 KiB) TX bytes:280 (280.0 B)

Network topology

- host (firewall drops forwarding between the bridges)
  - my_net
    - n1
    - n2
    - n3 <---- ⚠️ newly added
  - docker0
    - n3
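A quick way to see the effect of connect is to list a container's interface names before and after. The sketch below parses ifconfig-style output; list_ifaces is a hypothetical helper and the sample is a shortened copy of n3's output above.

```shell
#!/bin/sh
# Print interface names from ifconfig output read on stdin: a name is the
# first word of every line that does not start with whitespace.
list_ifaces() {
    awk '/^[^[:space:]]/ { sub(/:$/, "", $1); print $1 }'
}

# Shortened output of `docker exec n3 ifconfig` after the connect.
sample_ifconfig='eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:02
          inet addr:172.17.0.2  Bcast:172.17.255.255  Mask:255.255.0.0

eth1      Link encap:Ethernet  HWaddr 02:42:AC:13:00:04
          inet addr:172.19.0.4  Bcast:172.19.255.255  Mask:255.255.0.0'

printf '%s\n' "$sample_ifconfig" | list_ifaces

# On a real host:
#   docker exec n3 ifconfig | list_ifaces
```

For the sample this prints eth0 and eth1, one per line; the trailing-colon handling also lets the helper read the host's `docker0:` style output.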